One of the challenges of designing a service is understanding its Quality of Service (QoS), both in terms of what it is and, importantly, how it is measured. The point about QoS is that it should be judged from the perspective of the consumer rather than the producer. Historically people have ignored pieces like network connectivity and latency, but this is rapidly becoming nonsense as people treat cloud computing and SaaS as standard parts of an IT infrastructure.

To use an analogy: I live about 30 miles from the office in Soho, while Mike Morris lives in south London, about 10 miles away. Opening the door to get into the office takes about 10 seconds. Historically this is the SLA that would be given, and QoS would be measured from the point of standing outside the door to being inside it: a whole metre.

Both of us live within walking distance of a station, with another station within walking distance of the office: I'm on the overground system, Mike on the Underground. In other words, we both have network connectivity to the office.

Here is the point: even though I live significantly farther away than Mike, it takes us about the same time door-to-door, because my train is much faster and stops far less often than his Underground train. This means our normal QoS is pretty much the same. This is where most people stop, if they bother at all: measuring end-to-end performance under normal conditions. However, to really understand QoS, especially in a more critical area, it's important to look at what happens when things go wrong.

Mike has a couple of alternative routes if the main one fails. These take a bit longer (let's say 20-30% longer), and in the worst case he could walk there in about 2 hours (a 120% increase). From a disaster recovery perspective, then, his QoS isn't overly worrying. It's like switching from a dedicated commercial internet connection to ADSL, or at worst to dial-up: you can still get there, but the performance sucks.

For me, however, it's a different world of hurt. There are no alternative train routes, so it would be either the car (let's say a 150% increase) or, if that was buggered (for instance in the recent snow), walking, which would take an impressive 11 hours. In other words, it wouldn't work at all. I therefore need an alternative solution, and this comes in the form of home working, where I have a duplicate of the office environment (power, light, internet connectivity) plus some additional software (a VPN) to ensure I can continue working.

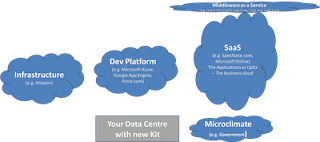

This is the point of QoS when looking at cloud, SaaS and distributed SOA solutions. You've got to think about what happens if the main network routes fail. Are there alternatives? Can you put in an ISDN line for emergencies? What if the connection really is down and can't be established? Can you have a local cache that will support minimal working? Can you degrade gracefully so that you don't need to use that service at all?
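One way to make those questions concrete on the consumer side is a fallback chain: try the main route, then each alternative, then a local cache, and finally a degraded mode that works without the service. The sketch below is a minimal illustration under stated assumptions, not a prescribed design. `ServiceUnavailable`, `fetch_with_fallback`, `primary_link` and `isdn_backup` are hypothetical names, and each "route" is just a Python callable standing in for a real network path.

```python
from typing import Callable


class ServiceUnavailable(Exception):
    """Hypothetical error raised by a route that cannot reach the remote service."""


def fetch_with_fallback(
    key: str,
    routes: list[Callable[[str], str]],
    cache: dict[str, str],
    degraded: Callable[[str], str],
) -> tuple[str, str]:
    """Try each route in order, then the local cache, then degraded mode.

    Returns (result, source) so the consumer can record which path served it.
    """
    for route in routes:
        try:
            result = route(key)
            cache[key] = result  # refresh the local cache on every success
            return result, route.__name__
        except ServiceUnavailable:
            continue  # this route is down; try the next alternative
    if key in cache:
        return cache[key], "cache"  # minimal working from possibly stale data
    return degraded(key), "degraded"  # carry on without the service at all


# Hypothetical routes: the main link is down, the emergency ISDN line works.
def primary_link(key: str) -> str:
    raise ServiceUnavailable("main route down")


def isdn_backup(key: str) -> str:
    return f"quote for {key} via ISDN"


cache: dict[str, str] = {}
offline = lambda k: f"offline form for {k}"
print(fetch_with_fallback("order-42", [primary_link, isdn_backup], cache, offline))
# ('quote for order-42 via ISDN', 'isdn_backup')
print(fetch_with_fallback("order-42", [primary_link], cache, offline))
# ('quote for order-42 via ISDN', 'cache')
print(fetch_with_fallback("order-43", [primary_link], cache, offline))
# ('offline form for order-43', 'degraded')
```

Returning the source alongside the result matters for QoS: it lets you report how often the business is running on the backup line, on stale cached data, or in degraded mode, rather than only whether a call "worked".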

The point about QoS is that you need to look at the failure conditions of the whole network, not simply the last inch. Many of these elements might be out of your control, so you need to push the QoS back onto the consumer and make sure they understand what is their responsibility and what you will guarantee. This is what the cloud and SaaS providers do, but if your job is to ensure the business can use those solutions, then you need to look at that end-to-end element and concentrate on connectivity and caching, rather than assuming that "the internet is always up and it's always performant".
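Measuring that end-to-end element means recording what the consumer actually experiences, including the requests that failed outright and the ones served by a slower fallback. The sketch below assumes each observation is a door-to-door latency in seconds (or `None` when no answer ever arrived) tagged with the path that served it; `Sample`, `qos_report` and the `"primary"` label are hypothetical names chosen for illustration. It reports availability, normal and fallback medians, and the percentage slowdown on fallback, the same kind of "20-30% longer" figure used in the commuting analogy.

```python
import statistics
from dataclasses import dataclass
from typing import Optional


@dataclass
class Sample:
    """One consumer-side observation of a request, measured door-to-door."""
    latency_s: Optional[float]  # None means the consumer never got an answer
    path: str                   # hypothetical labels, e.g. "primary", "backup", "cache"


def qos_report(samples: list[Sample]) -> dict[str, float]:
    """Summarise consumer-perceived QoS under both normal and failure conditions."""
    succeeded = [s for s in samples if s.latency_s is not None]
    report = {"availability": len(succeeded) / len(samples)}
    normal = [s.latency_s for s in succeeded if s.path == "primary"]
    fallback = [s.latency_s for s in succeeded if s.path != "primary"]
    if normal:
        report["normal_median_s"] = statistics.median(normal)
    if fallback:
        report["fallback_median_s"] = statistics.median(fallback)
    if normal and fallback:
        # Percentage increase of the fallback path over the normal path.
        ratio = report["fallback_median_s"] / report["normal_median_s"]
        report["fallback_increase_pct"] = 100 * (ratio - 1)
    return report


samples = [
    Sample(1.0, "primary"),
    Sample(1.0, "primary"),
    Sample(1.25, "backup"),
    Sample(None, "primary"),  # the request that never completed still counts
]
print(qos_report(samples))
```

Counting failed requests in the denominator is the design choice that keeps the figure honest from the consumer's side: a provider-side SLA that only measures the last inch would leave that request out entirely.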

SouthEastern trains provide me with great QoS on a good day and complete rubbish on rather a lot of days. If I were using a SaaS solution with similar network issues, my perception would be that it is the SaaS solution that is rubbish.

Perception is everything when rolling out new technologies and QoS is the rigour required to make sure the perception is positive.
